TLDR

We argue that frequent batch auction-style FCFS should be adopted in order to make the fairness notion more robust and welfare-maximizing (in sense of providing better UX and making the network long-term incentive aligned with the correct parties).

Specifically, we suggest that the “fairness granularity,” the interval during which all transactions received by a node are assumed to have happend at the same time, should be set to an interval of at least 500ms (conservative lower bound, will elaborate later).

This means that instead of reporting a strict ordering (by receive-order time) of transaction, each individual node report a partial ordering to the leader of the ordering consensus protocol. And after aggregating each individual nodes’ preferences, the leader gets a weak ordering (by FCFS) with unordered batches in it (caused by condorcet paradoxes and the initial partial ordering). Now the leader tries to resolve the order of the unordered batch using auctions (e.g., using coinbase.transfer).

We use FBA-FCFS (frequent batch auction first come first serve) to refer the the proposed ordering protocol.

We show this proposal is better than the original non-FBA version of FCFS in two ways:

- makes the fairness of the ordering more robust (in the sense of network latency tolerance) than the vanilla version, allowing the domain to provide a smoother UX

- more fair in the sense that it removes the negative externality caused by inefficient resource allocation and increases the welfare (an upper bound on ordering efficiency) of users, thus allowing the domain to attract much more future users (who aren’t visible currently)

We also address potential concerns of this proposal:

- FBA-FCFS incurs negligible latency overhead

- FBA-FCFS validators has no incentive to coarsen the fairness granularity

Assumption

First, we list some assumptions currently made by existing FCFS proposals (note there are no new assumptions):

- the system has a permissioned mempool that is only visible to the validators, and the privacy on top of the permission is trusted at the moment

- anybody is able to submit a transaction, the transaction is assumed to be broadcasted to all the validators at the same time

- the validators are geo-distribtued well enough

- there exists some social norms that can, with good enough accuracy and efficiency, slash dishonest validators

- there exists network latency services that allow VIP agents to broadcast transactions to every validator faster than non-VIP agents, allowing them to be ordered first in vanilla FCFS with decent probability

We define dishonest as either of the below:

- the validator violating the privacy assumption of the mempool and leaking transaction contents to sophisticated parties who can gain an ex-post knowledge of the transaction sender’s private information before finality.

- the validator manipulates the ordering of the transactions in a way such that transactions (not in the same batch) received later is placed before transactions received sooner.

We give some examples of activities that are impacted by ordering rules:

- sandwiching: requires the validator to both violate privacy and receive-order honesty

- generalized frontrunning: requires the validator to both violate privacy and receive-order honesty

- liquidation/arbitrage/NFT sniping: doesn’t require the validator to violate any honesty assumptions

- market making/statistical arbitrage: doesn’t require the validator to violate any honesty assumptions

It is clearly desirable to eradicate activities 1&2 and democratize activities 3&4.

Robustness

First, we want the ordering protocol to level the playing field between privileged and unprivileged users (who might have worse network latency). Specifically, with reasonable network latency assumptions, the fairness granularity should never be zero (or else, priviledged users will always win present preference conflicts) to allow for a smooth/fair UX for all users.

More importantly, we know that there exists a huge incentive for sophisticated users (i.e., MEV searchers, validators) to manipulate a vanilla FCFS system (violating the receive-order honesty) without incurring much risk.

This imposes a great risk to the long-term stability of the protocol because players cannot be trusted to be honest in an environment where they are encouraged to break the honesty assumption with minimal risk: optimal latency and ordering manipulation is always achieved by colocation with validators, which encourages vertical integration between validators and searchers which hurts the overall decentralization of the system.

On the contrary, if a FBA-FCFS system is adopted, the validators can be trusted to be honest as all the centralizing/dishonest vectors have been contained in the auction, making the protocol long-term stable (as we all know, only with sufficient honesty/security of a network can the domain gain significant traction of future users). Honesty assumptions and incentive aligenment has to go hand in hand.

We observe that from the four examples, 1&2 requires the validator to break both the privacy and receive-order honesty assumption. Thus, to strengthen our ordering protocol, we need either (i) opt-in privacy, through threshold encryption and mechanism similar to cr-list, or, (ii) rely on the social norm slashing mechanism to discover privacy violations (which is assumed in the original vanilla FCFS anyway, and this behavior would be very easily discoverable by any data services, txn scanners, or block builders).

This means that with privacy components in the protocol, we can indeed eradicate 1&2 while making the fairness more robust. Of course, the tradeoff here is that in the vanilla version of FCFS, validators can violate the privacy assumption and still won’t be able to conduct activities 1&2 efficiently, while in FBA-FCFS the validators can just violate the privacy guarantees and conduct 1&2 effectively. Note that this tradeoff is mitigated (on top of the two methods mentioned in previous paragraph) because:

- as more application layer protocols realize the importance of MEV-resistant design, activities 1&2 will be eradicated at a higher level in the transaction supplychain. Ordering layer is not the best abstraction layer to solve the negative externalities of non-private transaction MEV.

- public mempools are generally unseriable (as demonstrated by various pre-trade privacy/zk pushes), in future we will see more privacy solutions

Fairness

The acitivities in case 3&4 constitues a significant portion of all on-chain acitvities. And currently, most on-chain activities are (systematically relevant) DEX activities. In fact, 65%+ of Uniswap volume is from (statistical) arbitrage, and among the other 35% it’s a few big market makers. Thus, it is safe to say that, in majority of the time, there exists a conflict of preference of agents as they all want to capture this permissionless MEV opportunity, i.e., they have common value over the resource of “what the next on-chain state should be.” We breviate this resource to spec-on-state, which means which specification should the next state satisfy (which is sometimes abstracted in the notion of a “blockspace” and its attributes).

Our goal, then, should be to minimize the negative externality cause by the allocation of this resource of spec-on-state within burst periods (which occurs extremely often).

We observe that vanilla FCFS incurs much more negative externality than FBA-FCFS because sophisticated agents (e.g., MEV searchers) are encouraged to both spam the network and play a latency auction (whose endgame is spinning up multiple searcher bots instances distributed across the network to capture opportunities, which is also spamming). This is primarily caused by the fact that agents in the network have no way of expressing their preference and thus enter a stage of uncoordinated coordination (auction by other means). This means the allocation has many undesirable properties:

-

the sequencers/proposers/validators have inherent huge advantages because they can be the first ones to see the transactions and therefore the first to react (also the colocation is perfect so have very low network propagation latency). This implies that they are more likely to collude with sophisticated users and thus creates centralization in sequencers/proposers/validators, which harms geographical decentralization, network security, censorship resistance, and liveness.

-

the allocation probably takes the form of an all-pay auction (as in the case of most latency games, where backroom deals are signed/AWS instances are colocated in an uncoordinated manner) which makes the network-wide welfare decrease. And this costs will always be channeled by sophisticated users to unsophisticated users in the form of a less efficient market and worse UX. For example, market makers will charge a larger spread to retail users because they have a higher operating cost, as shown in traditional HFT, or that arbitraguers will not move the price of a pool because it costs them too much to do so, thus forcing users to trade in unfavorable out-dated prices. In the end, the permissionless economic value will be captured by some party, it could be captured by Amazon/Google who are neither incentive aligned with the Arbitrum ecosystem nor the crypto community, or, it could be captured by users.

-

the allocation mechanism is inexpressive, in this case, the agents have no mechanism of expressing their preference for activies (which sums up to 90% of the network activity), which means there is huge inefficiencies caused by Price of Anarchy. For example, in spam/randomized ordering auctions, the validators are forced into a more severe transaction quality trilemma, which imposes a direct tax on users. Essentially, the inefficiency, in equilibrium, equals to the amount that normal users are charged (compared with what they would’ve been getting in FBA-FCFS case), driving users away.

-

users are inable to have their true preference aggregated (because the mechanism/ordering protocol has only partial information and has no clue of how much money the agents spent on latency optimization), this also means that the MEV is internalized by latency services, whose entire business model is encouraging more MEV extraction and isn’t incentive aligned with Arbitrum in the prosperity of the domain and its long-term value accrue/user acquisition. Not internalizing the auction value to the domain also means that there exists no way of future re-distribution of the value, which has been shown to be damaging to the security of the network.

-

the game is not transparent, thus raises the barrier of entry, favoring sophisticated users over ordinary retail users more. This would make activies 3&4 more centralized and therefore the profit to be more centralized.

On the other hand, if we use a direct allocation mechanism (e.g., using coinbase.transfer) during those burst periods, we circumvente all five disadvantages of vanilla FCFS listed above while still retaining the benifits of low latency fast finality receipts and fairness.

Based on data in traditional markets (shown above), we know that the burst period in one pair of assets in the crypto market can be conservatively modeled (lower bound) as coming in every 500ms and lasts around 200ms. And since crypto has many frequently traded pairs with meaningful volume (relative), the price discovery happens much more often.

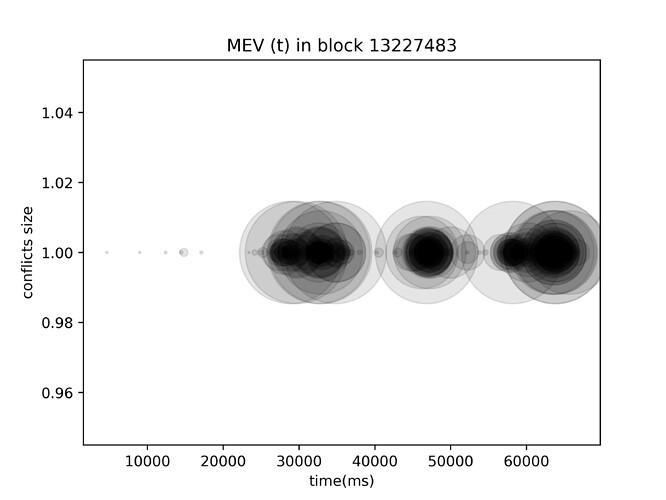

In fact, we found that in aggregation, the burst period on Ethereum takes around 1245ms, and 75.37% of the conflict of preferences happen in 4.115% of the time. For example, the burst period in block 13227081 consists 96.38% of the MEV load while only taking up 3.53% of the block time (640ms). Similarly, the burst period in block 13227483 took 922.5ms: it summed up 91.614% of the MEV within that block during less than 5.6% of the time (the diagram of MEV as a function to time is shown below). This means that if the same situation were to happen on vanilla FCFS, then, compared with FBA-FCFS, we will be bearing the extra negative externalities (e.g., centralization, higher fees, worse execution, etc,.) from 11.92x more conflict of preferences.

This means that the fairness granularity parameter, at least, should be chosen over 500ms. Moreover, if we factor in global delay uncertainties over the Internet, the interval would be larger than 500ms up to a few seconds.

As for latency UX concerns, 500ms auctions can be achieved with negligible latency cost to users as Solana has blocktime of 400ms and full block auctions are being conducted. Also, auction need only to happen per public information reveal (i.e., at the start of the block) because that is when the burst period starts.

Note that the 500ms bound is not fixed, the shorter we make the auction interval (fairness granularity), the fairness guarantee becomes less robust: it is easier for the validators to be malicious without penalty.

Conclusion

We presented FBA-FCFS and argued why it maintains the same no-frontrunning guarantee to users, while improving UX and fairness guarantees.

by Flashbots ![]()

![]() with love

with love ![]()